Then zip the contents of the project-dir directory. The same applies to other modules and libraries.
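Zipping the *contents* of the folder, rather than the folder itself, keeps the `.py` files and the pip-installed packages at the root of the archive, as the packaging instructions require. Below is a minimal standard-library sketch of that step. The helper name `zip_directory_contents` is hypothetical. The shell equivalent is running `zip -r ../function.zip .` from inside project-dir.

```python
import zipfile
from pathlib import Path


def zip_directory_contents(project_dir, zip_path):
    """Zip everything *inside* project_dir so files land at the root of the archive.

    Returns the archive names that were written.
    """
    root = Path(project_dir)
    target = Path(zip_path).resolve()
    written = []
    with zipfile.ZipFile(zip_path, "w", compression=zipfile.ZIP_DEFLATED) as zf:
        for path in sorted(root.rglob("*")):
            # Skip directories (stored implicitly) and the archive itself if it lives inside root.
            if not path.is_file() or path.resolve() == target:
                continue
            # Archive name is relative to project_dir, e.g. "psycopg2/__init__.py",
            # not "project-dir/psycopg2/__init__.py".
            arcname = path.relative_to(root).as_posix()
            zf.write(path, arcname)
            written.append(arcname)
    return written
```

Usage: `zip_directory_contents("project-dir", "function.zip")`, then upload `function.zip` as the function's deployment package.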

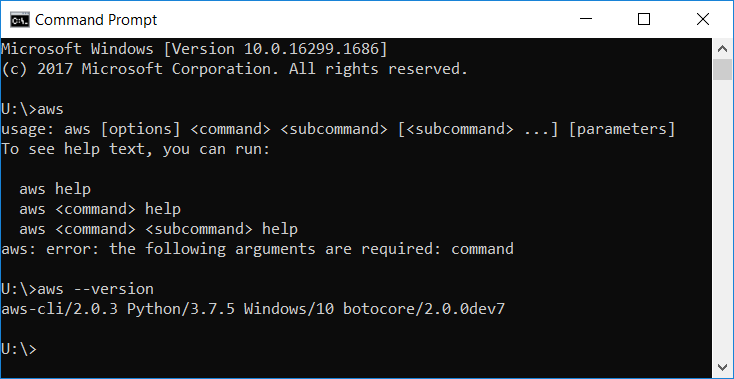

## AWS PostgreSQL: importing a CSV file into a table

At first, I did not import the module `psycopg2._psycopg`, only `psycopg2`, yet I got this error: `Unable to import module 'myfilemane': No module named 'psycopg2._psycopg'`. I don't know where the suffix `_psycopg` comes from. Second, I followed all the steps in the documentation: save all of your Python source files (the `.py` files) at the root level of this directory, and install any libraries using pip at the root level of the directory.
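`_psycopg` is psycopg2's compiled C extension. The `psycopg2` package imports it internally, so the error names a module that the function code never imports directly. A common cause of this error is running pip on Windows or macOS. The package then contains a binary built for that OS, which the Linux-based Lambda runtime cannot load. Treat this as an assumption about this particular setup.

The sketch below inspects an unzipped deployment package and reports which platform each `_psycopg` file targets. It relies on the usual CPython extension file naming (`.pyd` on Windows, `...-linux-gnu.so` on Linux, `...-darwin.so` on macOS). The helper name is hypothetical.

```python
from pathlib import Path


def psycopg_extension_builds(package_dir):
    """Map each compiled psycopg2._psycopg file in a package to the OS it was built for."""
    builds = {}
    # The extension lives inside the psycopg2 package folder; glob yields nothing if it is missing.
    for f in sorted((Path(package_dir) / "psycopg2").glob("_psycopg*")):
        name = f.name
        if name.endswith(".pyd"):
            builds[name] = "Windows"
        elif name.endswith(".so") and "linux" in name:
            builds[name] = "Linux"
        elif name.endswith(".so") and "darwin" in name:
            builds[name] = "macOS"
        else:
            builds[name] = "unknown"
    return builds
```

An empty result, or one with only a Windows or macOS entry, is consistent with the error above. One way to get a Linux build regardless of the local OS is to install a prebuilt wheel for the Lambda platform. For example, `pip install --platform manylinux2014_x86_64 --only-binary=:all: --target project-dir psycopg2-binary`, using a wheel that matches the function's Python version.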

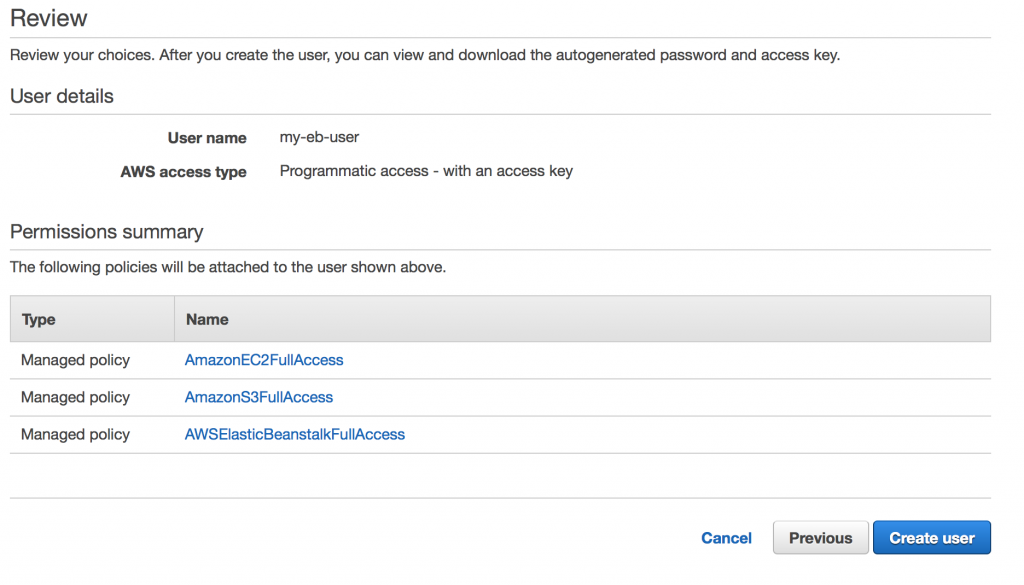

Downloading the CSV file into an in-memory buffer works, using `s3.Object('bucketname', 'filename.csv').download_fileobj(buf)`. However, I can't import the Python libraries I need, e.g. psycopg2: the statement `import psycopg2` fails.

Below is the command to export the result set of a SELECT statement to a CSV file with a pipe delimiter and a header:

```
\copy (SELECT * FROM tablename) TO 'C:\tmp\report1.csv' WITH CSV DELIMITER '|' HEADER
```

A file named report1.csv will be created on your local machine in the C:\tmp folder. You can use any delimiter for the output.

For this post, you create a test user with the least-required permission to export data to the S3 bucket.

AWS DMS (S3 as a source): AWS DMS can read data from source S3 buckets and load it into a target database. To do this, provide access to an S3 bucket containing one or more data files. In that S3 bucket, include a JSON file that describes how the data in those files maps to database tables.
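The JSON mapping file for DMS is an external table definition. It lists each table's name, its S3 path, its owner (schema) and its columns. The sketch below builds one such definition for a single table. It follows the field names in the AWS DMS "Amazon S3 as a source" documentation, whose examples write every value as a string. Verify the names against the current docs before relying on them.

The helper name and the `employee` / `hr` example values are hypothetical.

```python
import json


def dms_external_table_definition(table_name, table_path, table_owner, columns):
    """Build an AWS DMS external table definition for CSV files under one S3 prefix.

    `columns` is a list of dicts such as {"ColumnName": "Id", "ColumnType": "INT8"};
    values are converted to strings to match the documented examples.
    """
    table_columns = [{key: str(value) for key, value in col.items()} for col in columns]
    return {
        "TableCount": "1",
        "Tables": [
            {
                "TableName": table_name,
                "TablePath": table_path,  # S3 prefix holding this table's CSV files
                "TableOwner": table_owner,  # schema name in the target database
                "TableColumns": table_columns,
                "TableColumnsTotal": str(len(table_columns)),
            }
        ],
    }


# Hypothetical example: CSV files for hr.employee stored under hr/employee/ in the bucket.
definition = dms_external_table_definition(
    "employee",
    "hr/employee/",
    "hr",
    [
        {"ColumnName": "Id", "ColumnType": "INT8", "ColumnNullable": "false", "ColumnIsPk": "true"},
        {"ColumnName": "LastName", "ColumnType": "STRING", "ColumnLength": 20},
    ],
)
print(json.dumps(definition, indent=2))
```

The printed JSON is what would be supplied to the DMS S3 source endpoint as its table mapping.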

Via a Lambda function I have connected to Amazon S3 buckets and Amazon RDS. I can read the CSV file with the following code:

```python
import csv, io, boto3

# Access_Key and Secret_Access_Key are placeholders for your own credentials.
client = boto3.client('s3', aws_access_key_id=Access_Key, aws_secret_access_key=Secret_Access_Key)
```

To export your data, first connect to the cluster as the primary user, postgres in our case. By default, the primary user has permission to export and import data from Amazon S3.

To copy CSV files from your local machine into a local database, put the following batch file in the same folder as the CSV files:

```bat
for %%f in (*.csv) do psql -d yourdatabasename -h localhost -U postgres -p 5432 -c "\COPY public.yourtablename FROM '%%~dpnxf' DELIMITER ',' CSV;"
pause
```

You can also run the batch file on your computer and send the content of the CSV files to a remote database by replacing localhost with the remote host.
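The same loop can be written in Python so it runs on any OS. This is a minimal sketch that assumes `psql` is on the PATH and reuses the placeholder database and table names. `build_copy_commands` and `run_all` are hypothetical helpers. Each command is passed as an argument list, so no shell quoting is involved. The password can come from the `PGPASSWORD` environment variable or a `.pgpass` file, as with the batch version.

```python
import subprocess
from pathlib import Path


def build_copy_commands(folder, dbname, table, host="localhost", user="postgres", port=5432):
    """Return one psql argument list per *.csv file in `folder`, mirroring the batch loop."""
    commands = []
    for csv_path in sorted(Path(folder).glob("*.csv")):
        # Full path of the file, like %%~dpnxf in the batch file.
        # Assumes the path contains no single quotes.
        full_path = str(csv_path.resolve())
        meta = f"\\COPY {table} FROM '{full_path}' DELIMITER ',' CSV;"
        commands.append(["psql", "-d", dbname, "-h", host, "-U", user, "-p", str(port), "-c", meta])
    return commands


def run_all(commands):
    """Run each psql command in turn, stopping at the first failure."""
    for cmd in commands:
        subprocess.run(cmd, check=True)


# Usage (placeholder names); set host to the RDS endpoint to load a remote database:
# run_all(build_copy_commands(".", "yourdatabasename", "public.yourtablename"))
```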

I have created new tables in a PostgreSQL database on Amazon RDS and uploaded a CSV file into a bucket on Amazon S3.
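With the CSV in S3 and the tables on RDS, one common way to load the file from Lambda is PostgreSQL's `COPY ... FROM STDIN`, which psycopg2 exposes as `cursor.copy_expert()`. This is a minimal sketch under several assumptions:

- psycopg2 is packaged as a Linux build.
- The function's execution role can read the object, so no access keys appear in code.
- The bucket, key, table and connection settings come from hypothetical environment variables: `BUCKET`, `KEY`, `TABLE`, `DB_HOST`, `DB_NAME`, `DB_USER` and `DB_PASSWORD`.
- The CSV has a header row, and its columns appear in the same order as the table's.

```python
import io
import os
import re

# Accept only plain or schema-qualified identifiers, since the table name is put into SQL text.
_IDENTIFIER = re.compile(r"[A-Za-z_][A-Za-z0-9_]*(\.[A-Za-z_][A-Za-z0-9_]*)?")


def copy_csv_into_table(conn, buf, table, header=True):
    """Load CSV bytes from an in-memory buffer into `table` with COPY ... FROM STDIN."""
    if not _IDENTIFIER.fullmatch(table):
        raise ValueError(f"unsafe table name: {table!r}")
    buf.seek(0)  # download_fileobj leaves the position at the end of the data
    # HEADER true skips the first line; columns are matched by position, not by name.
    sql = f"COPY {table} FROM STDIN WITH (FORMAT csv, HEADER {'true' if header else 'false'})"
    with conn.cursor() as cur:
        cur.copy_expert(sql, buf)
    conn.commit()


def lambda_handler(event, context):
    # Imported here so the helper above can be used without these packages installed.
    import boto3
    import psycopg2

    buf = io.BytesIO()
    boto3.resource("s3").Object(os.environ["BUCKET"], os.environ["KEY"]).download_fileobj(buf)
    conn = psycopg2.connect(
        host=os.environ["DB_HOST"],
        dbname=os.environ["DB_NAME"],
        user=os.environ["DB_USER"],
        password=os.environ["DB_PASSWORD"],
    )
    try:
        copy_csv_into_table(conn, buf, os.environ["TABLE"])
    finally:
        conn.close()  # an uncommitted COPY is discarded if an error occurred
    return {"status": "loaded"}
```

COPY streams the whole file in one statement, which is usually much faster than parsing rows with the `csv` module and issuing one INSERT per row.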
